ETL for Data Migration: The Ultimate Guide

You’d be pressed hard to find an online business that doesn't depend on at least one data platform. So when the time comes to upgrade to a more capable solution, you can’t just delete the data and start from scratch. You need to migrate data into a new environment without any loss.

That’s when ETL data migration enters the fray.

Feb 14 2023●6 min read

What is data migration in ETL?

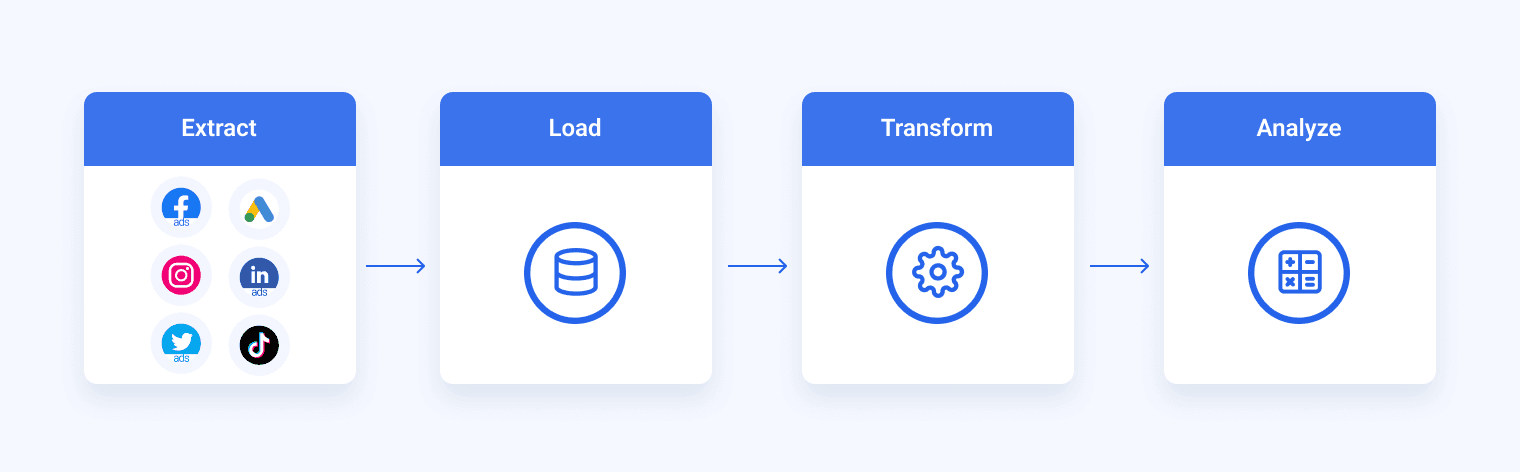

ETL data migration is the process of moving data from one source to another by extracting (E), transforming (T), and loading (L) data into target storage, typically a data warehouse. The process changes the data to meet specific rules and requirements.

When designing Whatagraph’s data migration feature, we wanted to automate all those adjustments.

This way small non-tech teams and marketing agencies that handle hundreds of clients can save the time needed to load data from multiple sources to Google BigQuery.

As a matter of fact, our data transfer process takes only a few clicks to complete, so anyone can do it.

No code knowledge or data engineering is required.

ETL vs. data migration vs. data integration

ETL

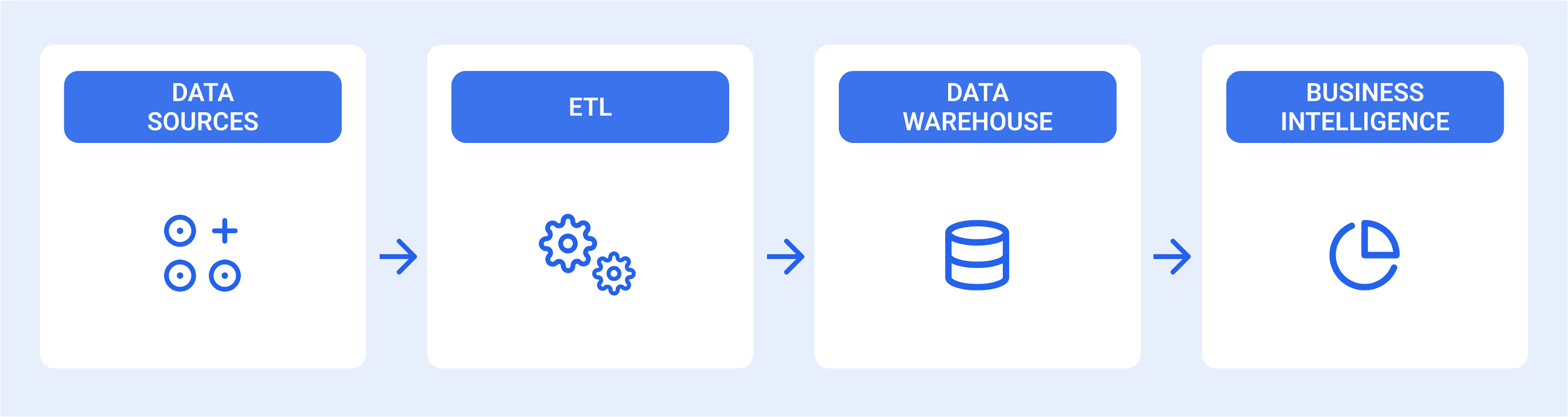

This three-step process is useful when you want to move data from multiple sources into a data lake or cloud data warehouses like Snowflake, Microsoft Azure, Google BigQuery, and Amazon Redshift data warehouse.

ETL process and data migration are somewhat related because they both involve data extraction and moving data from one source to another. However, data migration doesn’t include changing the format, while ETL does. That’s why there’s the word “transform” in its name.

Both ETL and data migration are used when businesses need to move the information they have, but data integration doesn’t transform data.

The data transformation aspect is the most important part of the ETL. To run analyses and draw actionable insights, data formats need to be standardized. This includes deduplication (eliminating redundant data), data mapping, data cleansing, verification, sorting, etc.

Data migration

Data migration is the process of moving data and metadata from a source system to a new system. In the context of ETL, data migration involves at least the extract and load steps, while it can also undergo minimum transformations, after which it can be loaded into a target system.

Organizations resort to data migrations for several reasons. They might need to overhaul an entire system, move from legacy systems to modern architectures, upgrade datasets, set up a new data warehouse, or merge new data from an acquisition. Data migration is also needed when deploying another system that exists alongside existing applications.

Data integration

Data integration refers to the process of combining data from different sources, such as on-premise and external systems and applications, into a single unified view for the purposes of more efficient data management, extracting meaningful information, and gaining actionable business intelligence.

Data integration is an essential part of the ETL data pipeline and includes data ingestion, data processing, transformation, and storage. The data integration process improves an organization’s data quality over time.

ETL data migration

ETL data migration is a cycle of extracting data from various sources, changing the data according to business requirements, and loading it into a target data set. It involves migrating information from one framework to another and making adjustments necessary for the user application.

The data migration process in ETL in 6 steps

Step 1: Scope the project

Scoping the project comes in handy either when you haven’t conducted a data migration before or the parameters of the project are unclear.

Draw up a plan that addresses critical areas of the project structure before the project starts. You should include:

- Stakeholders and their required deliverables

- System and migration expertise

- Communication plans and reporting requirements

- Budgets and deadlines

If you’re using an external provider to run the project, make sure to be clear about your own dataset because they might change requirements and incur additional fees.

Also, be clear about the size of the project and get a clear understanding of its scope before agreeing on costs with a supplier.

Step 2: Evaluate the resources

If you want a staged, well-managed and robust approach to data migration, you need a clear method. Assess the project and core migration process and consider incorporating standards into your project.

You can use these standards to identify problem areas early on and make sure you don’t head into the final stages with multiple issues to sort out.

For example, you need to evaluate the ETL tools available for data migration. Apart from the features, you need to ask:

- How flexible is your existing data migration tool?

- Does it match the skills of the people working on the project?

- Do you need assistance from data engineers to complete the project?

Choosing a resilient data migration platform will reduce reliance on external experts and boost the confidence of your project team.

Those are the very guidelines we had in mind when developing Whatagraph.

We wanted a tool that would simplify data transfers to the point & click and drag & drop level.

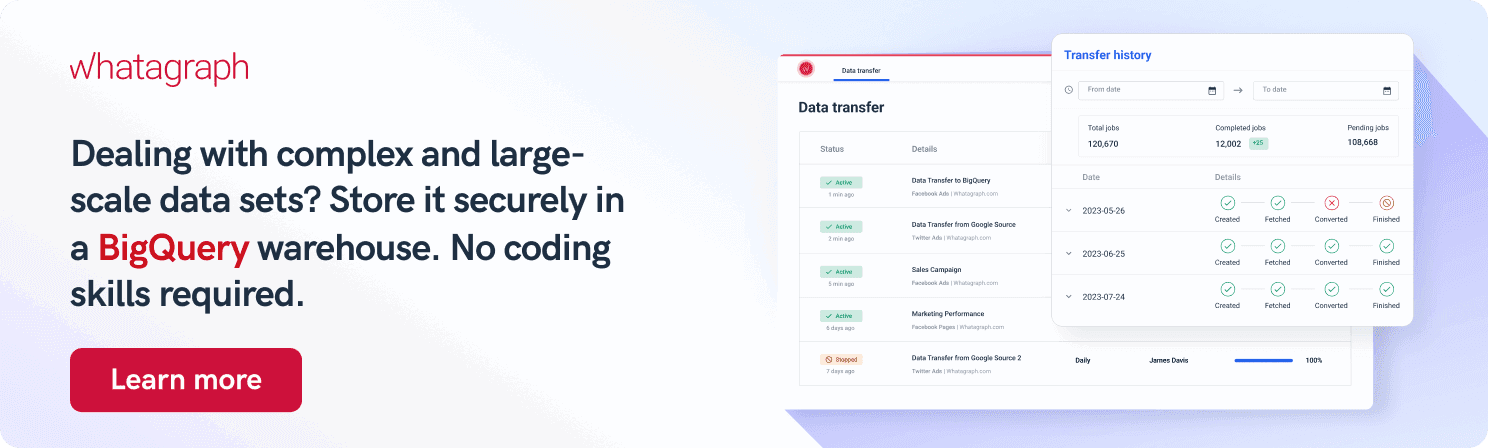

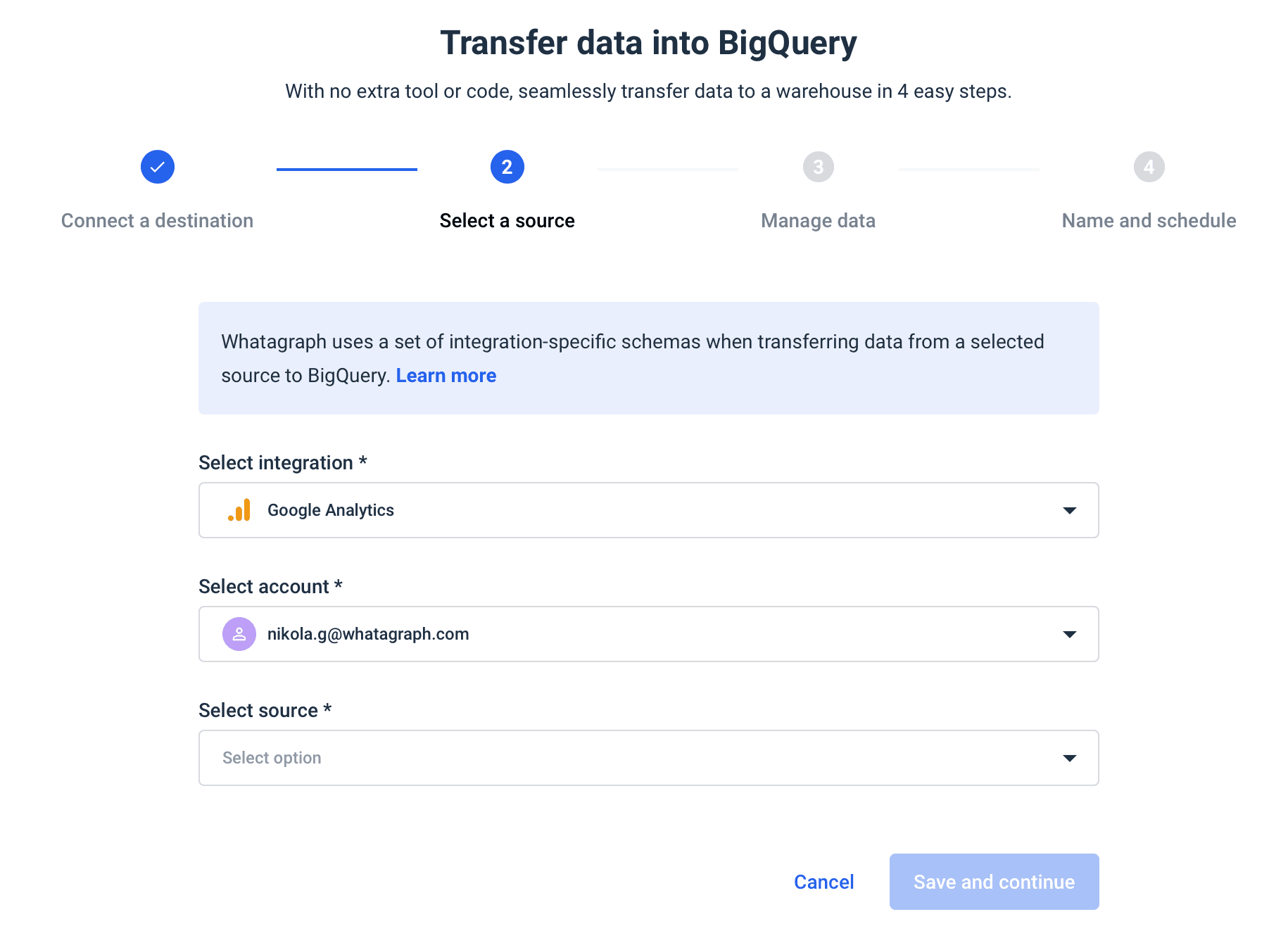

Whatagraph is an automated data pipeline that helps teams move large volumes of data from multiple marketing data sources to Google BigQuery.

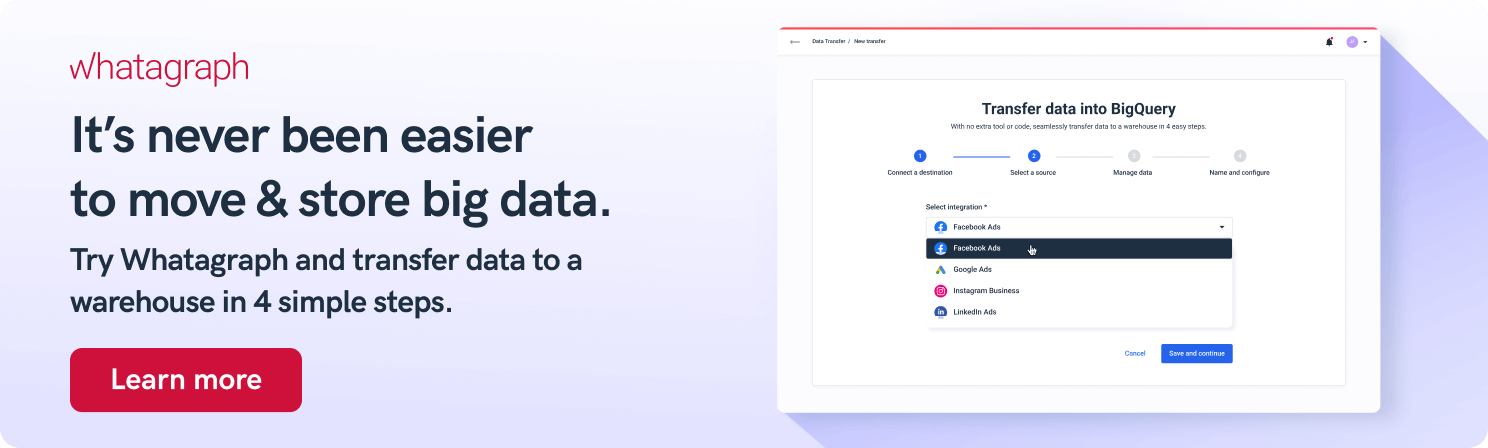

To use Whatagraph’s data transfer service to migrate your data, you don’t need any SQL knowledge. Using our platform, you can complete the data transfer in just four steps:

- Connect the destination

- Choose the integration

- Set the schema

- Schedule the transfer

And that’s that.

The process is the same whether you’re moving data from Google Ads or Instagram Business to BigQuery.

Apart from transfer automation, if you need to report on the data in a new location, Whatagraph offers captivating data visualization via interactive dashboards.

Step 3: Design the migration

It’s tempting to develop your data migration to just a bare minimum. But as you’ll be doing one implementation at a time, you need to get it right. A common tactic is to break the data into subsets and build one category at a time and test every time.

If your organization is undergoing a particularly large migration, it makes sense to build and test in parallel.

Step 4: Test the design

The testing design stage defines an overall test plan for all the migration stages. An initial overview should assess the tools, reporting, structures, and limitations involved with testing.

The overview also specifies how each stage will be tested at the unit level, followed by how the entire migration will be tested from start to end, depending on the workflow.

Unit test specifications will outline test groups, which in return contain individual tests for the particular area of the migration. Each test is then broken down into its component steps, including description and expected results.

Step 5: Develop

Data migration specialists use agile methodologies to develop a data migration project in stages. This has proven successful in migrations in which a large number of stakeholders are involved.

An agile, real-time approach that is visible across the teams guarantees that the risks are mitigated as soon as they occur. It also provides test data relatively early in the process.

Step 6: Execute

To test the go-live strategy, data migration teams often execute dry runs first. This approach allows you to make adjustments where necessary. Once you’re ready to go live, it’s best to implement the migration during the weekend to reduce disruption to the business process.

Alternatively, you can run the old and new systems simultaneously and transfer data piece by piece. If business goals allow, parallel migration is always less disruptive. It can increase the budget and timelines but allow your team to address any issues that occur with minimum downtime.

Conclusion

As we see, ETL is data migration evolved to include the transformation stage. Still, data migration will continue to exist as long as businesses need to move large datasets and as long as there’s economic value in storing information in the cloud.

The first step is to determine which business problems you want to solve. It might be a simple technical data migration from one system to another or a full-scale ETL project that will open up new business opportunities.

When designing Whatagrap as a one-stop data transfer tool, we wanted it to be easy enough to use by non-technical teams but also give it powerful compute to handle multiple connections for marketing teams that handle hundreds of accounts.

If you’re looking for an easy-to-use solution to connect data from various marketing sources, keep your marketing data safe, or want to visualize your BigQuery data, book a demo call and find out how Whatagraph can help.

WRITTEN BY

Nikola GemesNikola is a content marketer at Whatagraph with extensive writing experience in SaaS and tech niches. With a background in content management apps and composable architectures, it's his job to educate readers about the latest developments in the world of marketing data, data warehousing, headless architectures, and federated content platforms.